Python Web Scraping Tutorial: Scraping Sina Weibo Data with Python

Published 2019-08-06 09:41

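The script scrapes a Sina Weibo user's posts and writes them to a CSV file and a TXT file, each named after the target user's id plus the ".csv" or ".txt" extension. It can optionally download the original images attached to those posts as well.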
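As a rough illustration of the CSV output described above, here is a minimal Python 3 sketch of appending rows to a file named after the user id. The helper `append_rows` and the two-column header are hypothetical and exist only for this example; the `utf-8-sig` encoding matches what the script's Python 3 branch uses, which helps spreadsheet tools recognise the file as UTF-8.

```python
import csv
import os
import tempfile


def append_rows(csv_path, headers, rows):
    """Append rows to csv_path, writing the header only when the file is new or empty.

    Hypothetical helper for illustration; the original script decides whether
    to write the header from its own wrote_num counter instead.
    """
    write_header = not os.path.exists(csv_path) or os.path.getsize(csv_path) == 0
    # newline='' lets the csv module control line endings itself.
    with open(csv_path, 'a', encoding='utf-8-sig', newline='') as f:
        writer = csv.writer(f)
        if write_header:
            writer.writerow(headers)
        writer.writerows(rows)


# Demo in a temporary directory; the file name follows the "<user id>.csv" rule.
demo_path = os.path.join(tempfile.mkdtemp(), '1669879400.csv')
append_rows(demo_path, ['id', 'content'], [['a1', u'第一条微博']])
append_rows(demo_path, ['id', 'content'], [['a2', u'第二条微博']])
with open(demo_path, encoding='utf-8-sig', newline='') as f:
    for row in csv.reader(f):
        print(row)
```

Writing in append mode mirrors the script's behaviour of flushing results to disk in batches rather than holding everything until the end.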

Runtime environment

Language: Python 2 / Python 3

OS: Windows/Linux/macOS

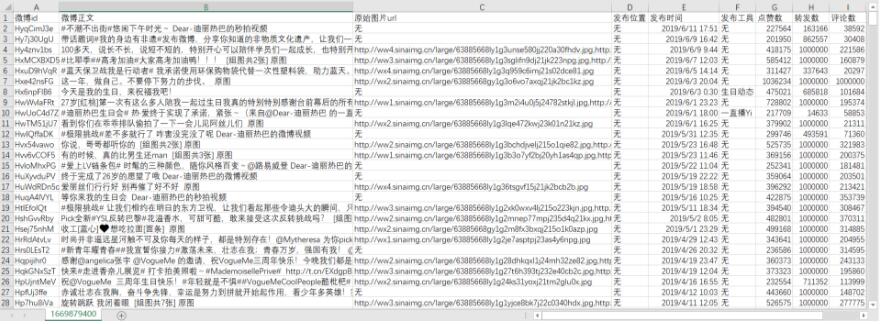

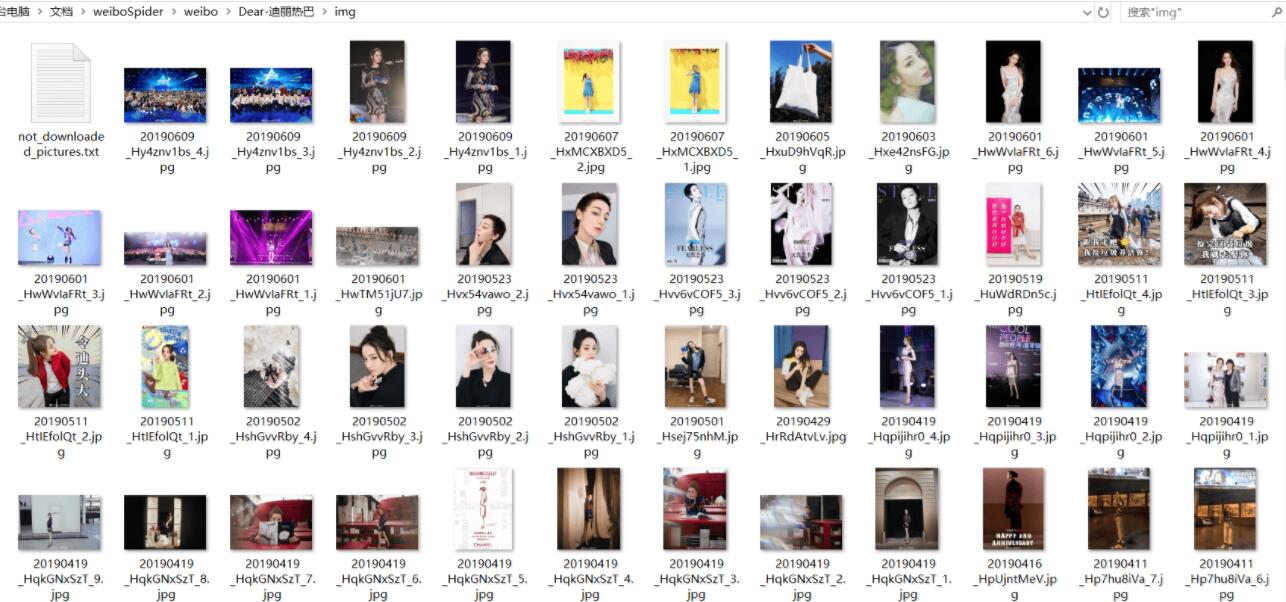

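As an example, we scrape the Weibo account of Dilraba (迪丽热巴). Her Weibo nickname is "Dear-迪丽热巴" and her id is 1669879400 (how to find a user id is covered later). We choose to scrape only her original posts. The program automatically creates a folder named weibo, where every account we scrape from now on is stored. Inside it, the program creates a folder named "Dear-迪丽热巴" that holds all results for this account. The "Dear-迪丽热巴" folder contains one CSV file, one TXT file, and an img folder for the downloaded images.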
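The folder layout described above can be sketched in a few lines. This is a simplified illustration, not the script's own code: `result_paths` is a hypothetical helper, and `base_dir` stands in for the directory that contains the script.

```python
import os


def result_paths(base_dir, nickname, user_id):
    """Return where the csv, txt and img results for one user would live.

    Hypothetical helper: base_dir/weibo/<nickname>/<user_id>.csv and .txt,
    plus an img sub-folder for downloaded pictures.
    """
    user_dir = os.path.join(base_dir, 'weibo', nickname)
    return {
        'csv': os.path.join(user_dir, '%d.csv' % user_id),
        'txt': os.path.join(user_dir, '%d.txt' % user_id),
        'img': os.path.join(user_dir, 'img'),
    }


paths = result_paths('.', u'Dear-迪丽热巴', 1669879400)
for kind in ('csv', 'txt', 'img'):
    print(kind, paths[kind])
```

Keeping one folder per nickname means results from different accounts never overwrite each other, even though the file names are just the numeric id.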

The CSV file looks like this:

The TXT file looks like this:

The downloaded images look like this:

The img folder

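This run downloaded 766 images, 1.15 GB in total, covering both the images in her original posts and the images attached to the repost comments of her reposts. Each image is named in the form yyyymmdd + Weibo id; if a post has several images, the name also includes the image's index within that post. One image failed to download because of a timeout, and its URL was written to not_downloaded_pictures.txt.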
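To make the naming rule concrete, here is a small sketch. The helper `picture_name`, the Weibo id `HxAbCdEfG`, and the example URLs are all made up for illustration; the underscore separators follow the way the script joins the parts.

```python
def picture_name(publish_time, weibo_id, url, index=None):
    """Build an image file name from a post's date, its id and the image URL.

    Hypothetical helper. publish_time is assumed to look like
    'YYYY-MM-DD HH:MM'; index is the 1-based position of the image when the
    post has several images, or None when it has only one.
    """
    date_part = publish_time[:10].replace('-', '')   # 'YYYY-MM-DD' -> 'YYYYMMDD'
    suffix = url[url.rfind('.'):]                     # keep the URL's extension
    name = date_part + '_' + weibo_id
    if index is not None:
        name += '_' + str(index)
    return name + suffix


print(picture_name('2019-08-06 09:41', 'HxAbCdEfG',
                   'https://example.com/large/pic.jpg'))
print(picture_name('2019-08-06 09:41', 'HxAbCdEfG',
                   'https://example.com/large/pic.gif', index=2))
```

Putting the date first makes the files sort chronologically in any file browser.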

Source code:

```python
#!/usr/bin/env python
# -*- coding: UTF-8 -*-

import codecs
import csv
import os
import random
import re
import sys
import traceback
from collections import OrderedDict
from datetime import datetime, timedelta
from time import sleep

import requests
from lxml import etree
from tqdm import tqdm

# Note: string literals are kept in Chinese on purpose. Several of them are
# matched against the text of weibo.cn pages, and others are written to the
# output files.


class Weibo(object):
    cookie = {'Cookie': 'your cookie'}  # replace 'your cookie' with your own cookie

    def __init__(self, user_id, filter=0, pic_download=0):
        """Initialise the Weibo crawler."""
        if not isinstance(user_id, int):
            sys.exit(u'user_id值应为一串数字形式,请重新输入')
        if filter != 0 and filter != 1:
            sys.exit(u'filter值应为0或1,请重新输入')
        if pic_download != 0 and pic_download != 1:
            sys.exit(u'pic_download值应为0或1,请重新输入')
        self.user_id = user_id  # numeric user id, e.g. 1669879400 for "Dear-迪丽热巴"
        self.filter = filter  # 0 (default): scrape all posts; 1: original posts only
        self.pic_download = pic_download  # 0 (default): skip images; 1: download them
        self.nickname = ''  # user nickname, e.g. "Dear-迪丽热巴"
        self.weibo_num = 0  # total number of posts by the user
        self.got_num = 0  # number of posts scraped so far
        self.following = 0  # number of accounts the user follows
        self.followers = 0  # number of followers
        self.weibo = []  # all scraped posts

    def deal_html(self, url):
        """Fetch a page and parse it into an lxml selector."""
        try:
            html = requests.get(url, cookies=self.cookie).content
            selector = etree.HTML(html)
            return selector
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def deal_garbled(self, info):
        """Extract text from a node and drop characters the console cannot encode."""
        try:
            info = (info.xpath('string(.)').replace(u'\u200b', '').encode(
                sys.stdout.encoding, 'ignore').decode(sys.stdout.encoding))
            return info
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_nickname(self):
        """Get the user's nickname."""
        try:
            url = 'https://weibo.cn/%d/info' % (self.user_id)
            selector = self.deal_html(url)
            nickname = selector.xpath('//title/text()')[0]
            self.nickname = nickname[:-3]
            # A login-page title means the cookie is wrong or has expired.
            if self.nickname == u'登录 - 新' or self.nickname == u'新浪':
                sys.exit(u'cookie错误或已过期,请按照README中方法重新获取')
            print(u'用户昵称: ' + self.nickname)
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_user_info(self, selector):
        """Get the nickname, post count, following count and follower count."""
        try:
            self.get_nickname()  # get the nickname
            user_info = selector.xpath("//div[@class='tip2']/*/text()")

            self.weibo_num = int(user_info[0][3:-1])
            print(u'微博数: ' + str(self.weibo_num))

            self.following = int(user_info[1][3:-1])
            print(u'关注数: ' + str(self.following))

            self.followers = int(user_info[2][3:-1])
            print(u'粉丝数: ' + str(self.followers))
            print('*' * 100)
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_page_num(self, selector):
        """Get the total number of pages of posts."""
        try:
            if not selector.xpath("//input[@name='mp']"):
                page_num = 1
            else:
                page_num = int(
                    selector.xpath("//input[@name='mp']")[0].attrib['value'])
            return page_num
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_long_weibo(self, weibo_link):
        """Get the full text of a long original post."""
        try:
            selector = self.deal_html(weibo_link)
            info = selector.xpath("//div[@class='c']")[1]
            wb_content = self.deal_garbled(info)
            wb_time = info.xpath("//span[@class='ct']/text()")[0]
            weibo_content = wb_content[wb_content.find(':') +
                                       1:wb_content.rfind(wb_time)]
            return weibo_content
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_original_weibo(self, info, weibo_id):
        """Get the text of an original post."""
        try:
            weibo_content = self.deal_garbled(info)
            weibo_content = weibo_content[:weibo_content.rfind(u'赞')]
            a_text = info.xpath('div//a/text()')
            if u'全文' in a_text:
                weibo_link = 'https://weibo.cn/comment/' + weibo_id
                wb_content = self.get_long_weibo(weibo_link)
                if wb_content:
                    weibo_content = wb_content
            return weibo_content
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_long_retweet(self, weibo_link):
        """Get the full text of a long reposted post."""
        try:
            wb_content = self.get_long_weibo(weibo_link)
            weibo_content = wb_content[:wb_content.rfind(u'原文转发')]
            return weibo_content
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_retweet(self, info, weibo_id):
        """Get the text of a repost."""
        try:
            original_user = info.xpath("div/span[@class='cmt']/a/text()")
            if not original_user:
                wb_content = u'转发微博已被删除'
                return wb_content
            else:
                original_user = original_user[0]
            wb_content = self.deal_garbled(info)
            wb_content = wb_content[wb_content.find(':') +
                                    1:wb_content.rfind(u'赞')]
            wb_content = wb_content[:wb_content.rfind(u'赞')]
            a_text = info.xpath('div//a/text()')
            if u'全文' in a_text:
                weibo_link = 'https://weibo.cn/comment/' + weibo_id
                weibo_content = self.get_long_retweet(weibo_link)
                if weibo_content:
                    wb_content = weibo_content
            retweet_reason = self.deal_garbled(info.xpath('div')[-1])
            retweet_reason = retweet_reason[:retweet_reason.rindex(u'赞')]
            wb_content = (retweet_reason + '\n' + u'原始用户: ' + original_user +
                          '\n' + u'转发内容: ' + wb_content)
            return wb_content
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def is_original(self, info):
        """Tell whether a post is an original post."""
        is_original = info.xpath("div/span[@class='cmt']")
        if len(is_original) > 3:
            return False
        else:
            return True

    def get_weibo_content(self, info, is_original):
        """Get the text of a post."""
        try:
            weibo_id = info.xpath('@id')[0][2:]
            if is_original:
                weibo_content = self.get_original_weibo(info, weibo_id)
            else:
                weibo_content = self.get_retweet(info, weibo_id)
            print(weibo_content)
            return weibo_content
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_publish_place(self, info):
        """Get the location a post was published from."""
        try:
            div_first = info.xpath('div')[0]
            a_list = div_first.xpath('a')
            publish_place = u'无'
            for a in a_list:
                if ('place.weibo.com' in a.xpath('@href')[0]
                        and a.xpath('text()')[0] == u'显示地图'):
                    weibo_a = div_first.xpath("span[@class='ctt']/a")
                    if len(weibo_a) >= 1:
                        publish_place = weibo_a[-1]
                        if (u'视频' == div_first.xpath(
                                "span[@class='ctt']/a/text()")[-1][-2:]):
                            if len(weibo_a) >= 2:
                                publish_place = weibo_a[-2]
                            else:
                                publish_place = u'无'
                        publish_place = self.deal_garbled(publish_place)
                        break
            print(u'微博发布位置: ' + publish_place)
            return publish_place
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_publish_time(self, info):
        """Get the publish time of a post as 'YYYY-MM-DD HH:MM'."""
        try:
            str_time = info.xpath("div/span[@class='ct']")
            str_time = self.deal_garbled(str_time[0])
            publish_time = str_time.split(u'来自')[0]
            if u'刚刚' in publish_time:  # "just now"
                publish_time = datetime.now().strftime('%Y-%m-%d %H:%M')
            elif u'分钟' in publish_time:  # "N minutes ago"
                minute = publish_time[:publish_time.find(u'分钟')]
                minute = timedelta(minutes=int(minute))
                publish_time = (datetime.now() -
                                minute).strftime('%Y-%m-%d %H:%M')
            elif u'今天' in publish_time:  # "today HH:MM"
                today = datetime.now().strftime('%Y-%m-%d')
                time = publish_time[3:]
                publish_time = today + ' ' + time
            elif u'月' in publish_time:  # "MM月DD日 HH:MM" within the current year
                year = datetime.now().strftime('%Y')
                month = publish_time[0:2]
                day = publish_time[3:5]
                time = publish_time[7:12]
                publish_time = year + '-' + month + '-' + day + ' ' + time
            else:
                publish_time = publish_time[:16]
            print(u'微博发布时间: ' + publish_time)
            return publish_time
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_publish_tool(self, info):
        """Get the client a post was published with."""
        try:
            str_time = info.xpath("div/span[@class='ct']")
            str_time = self.deal_garbled(str_time[0])
            if len(str_time.split(u'来自')) > 1:
                publish_tool = str_time.split(u'来自')[1]
            else:
                publish_tool = u'无'
            print(u'微博发布工具: ' + publish_tool)
            return publish_tool
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_weibo_footer(self, info):
        """Get the like, repost and comment counts of a post."""
        try:
            footer = {}
            pattern = r'\d+'
            str_footer = info.xpath('div')[-1]
            str_footer = self.deal_garbled(str_footer)
            str_footer = str_footer[str_footer.rfind(u'赞'):]
            weibo_footer = re.findall(pattern, str_footer, re.M)

            up_num = int(weibo_footer[0])
            print(u'点赞数: ' + str(up_num))
            footer['up_num'] = up_num

            retweet_num = int(weibo_footer[1])
            print(u'转发数: ' + str(retweet_num))
            footer['retweet_num'] = retweet_num

            comment_num = int(weibo_footer[2])
            print(u'评论数: ' + str(comment_num))
            footer['comment_num'] = comment_num
            return footer
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def extract_picture_urls(self, info, weibo_id):
        """Extract the original-size image URLs of a post."""
        try:
            a_list = info.xpath('div/a/@href')
            first_pic = 'https://weibo.cn/mblog/pic/' + weibo_id + '?rl=0'
            all_pic = 'https://weibo.cn/mblog/picAll/' + weibo_id + '?rl=1'
            if first_pic in a_list:
                if all_pic in a_list:
                    selector = self.deal_html(all_pic)
                    preview_picture_list = selector.xpath('//img/@src')
                    picture_list = [
                        p.replace('/thumb180/', '/large/')
                        for p in preview_picture_list
                    ]
                    picture_urls = ','.join(picture_list)
                else:
                    if info.xpath('.//img/@src'):
                        preview_picture = info.xpath('.//img/@src')[-1]
                        picture_urls = preview_picture.replace(
                            '/wap180/', '/large/')
                    else:
                        sys.exit(
                            u"爬虫微博可能被设置成了'不显示图片',请前往"
                            u"'https://weibo.cn/account/customize/pic',修改为'显示'"
                        )
            else:
                picture_urls = '无'
            return picture_urls
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_picture_urls(self, info, is_original):
        """Get the original-size image URLs of a post."""
        try:
            weibo_id = info.xpath('@id')[0][2:]
            picture_urls = {}
            if is_original:
                original_pictures = self.extract_picture_urls(info, weibo_id)
                picture_urls['original_pictures'] = original_pictures
                if not self.filter:
                    picture_urls['retweet_pictures'] = '无'
            else:
                retweet_url = info.xpath("div/a[@class='cc']/@href")[0]
                retweet_id = retweet_url.split('/')[-1].split('?')[0]
                retweet_pictures = self.extract_picture_urls(info, retweet_id)
                picture_urls['retweet_pictures'] = retweet_pictures
                a_list = info.xpath('div[last()]/a/@href')
                original_picture = '无'
                for a in a_list:
                    if a.endswith(('.gif', '.jpeg', '.jpg', '.png')):
                        original_picture = a
                        break
                picture_urls['original_pictures'] = original_picture
            return picture_urls
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def download_pic(self, url, pic_path):
        """Download a single image; log its URL if the download fails."""
        try:
            p = requests.get(url)
            with open(pic_path, 'wb') as f:
                f.write(p.content)
        except Exception as e:
            error_file = self.get_filepath(
                'img') + os.sep + 'not_downloaded_pictures.txt'
            with open(error_file, 'ab') as f:
                url = url + '\n'
                f.write(url.encode(sys.stdout.encoding))
            print('Error: ', e)
            traceback.print_exc()

    def download_pictures(self):
        """Download the images of all scraped posts."""
        try:
            print(u'即将进行图片下载')
            img_dir = self.get_filepath('img')
            for w in tqdm(self.weibo, desc=u'图片下载进度'):
                if w['original_pictures'] != '无':
                    # First 10 characters are 'YYYY-MM-DD' (the original
                    # sliced 11, which left a space in the file name).
                    pic_prefix = w['publish_time'][:10].replace(
                        '-', '') + '_' + w['id']
                    if ',' in w['original_pictures']:
                        w['original_pictures'] = w['original_pictures'].split(
                            ',')
                        for j, url in enumerate(w['original_pictures']):
                            pic_suffix = url[url.rfind('.'):]
                            pic_name = pic_prefix + '_' + str(j +
                                                              1) + pic_suffix
                            pic_path = img_dir + os.sep + pic_name
                            self.download_pic(url, pic_path)
                    else:
                        pic_suffix = w['original_pictures'][
                            w['original_pictures'].rfind('.'):]
                        pic_name = pic_prefix + pic_suffix
                        pic_path = img_dir + os.sep + pic_name
                        self.download_pic(w['original_pictures'], pic_path)
            print(u'图片下载完毕,保存路径:')
            print(img_dir)
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_one_weibo(self, info):
        """Get all fields of one post."""
        try:
            weibo = OrderedDict()
            is_original = self.is_original(info)
            if (not self.filter) or is_original:
                weibo['id'] = info.xpath('@id')[0][2:]
                weibo['content'] = self.get_weibo_content(info,
                                                          is_original)  # post text
                picture_urls = self.get_picture_urls(info, is_original)
                weibo['original_pictures'] = picture_urls[
                    'original_pictures']  # image URLs of the post itself
                if not self.filter:
                    weibo['retweet_pictures'] = picture_urls[
                        'retweet_pictures']  # image URLs of the reposted post
                    weibo['original'] = is_original  # whether the post is original
                weibo['publish_place'] = self.get_publish_place(info)  # location
                weibo['publish_time'] = self.get_publish_time(info)  # publish time
                weibo['publish_tool'] = self.get_publish_tool(info)  # client
                footer = self.get_weibo_footer(info)
                weibo['up_num'] = footer['up_num']  # likes
                weibo['retweet_num'] = footer['retweet_num']  # reposts
                weibo['comment_num'] = footer['comment_num']  # comments
            else:
                weibo = None
            return weibo
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_one_page(self, page):
        """Get all posts on the given page."""
        try:
            url = 'https://weibo.cn/u/%d?page=%d' % (self.user_id, page)
            selector = self.deal_html(url)
            info = selector.xpath("//div[@class='c']")
            is_exist = info[0].xpath("div/span[@class='ctt']")
            if is_exist:
                for i in range(0, len(info) - 2):
                    weibo = self.get_one_weibo(info[i])
                    if weibo:
                        self.weibo.append(weibo)
                        self.got_num += 1
                        print('-' * 100)
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def get_filepath(self, type):
        """Get the path of a result file, or of the img folder."""
        try:
            file_dir = os.path.split(os.path.realpath(
                __file__))[0] + os.sep + 'weibo' + os.sep + self.nickname
            if type == 'img':
                file_dir = file_dir + os.sep + 'img'
            if not os.path.isdir(file_dir):
                os.makedirs(file_dir)
            if type == 'img':
                return file_dir
            file_path = file_dir + os.sep + '%d' % self.user_id + '.' + type
            return file_path
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def write_csv(self, wrote_num):
        """Write the scraped posts to the CSV file."""
        try:
            result_headers = [
                '微博id',
                '微博正文',
                '原始图片url',
                '发布位置',
                '发布时间',
                '发布工具',
                '点赞数',
                '转发数',
                '评论数',
            ]
            if not self.filter:
                result_headers.insert(3, '被转发微博原始图片url')
                result_headers.insert(4, '是否为原创微博')
            result_data = [w.values() for w in self.weibo][wrote_num:]
            if sys.version_info[0] < 3:  # Python 2.x
                reload(sys)
                sys.setdefaultencoding('utf-8')
                with open(self.get_filepath('csv'), 'ab') as f:
                    f.write(codecs.BOM_UTF8)
                    writer = csv.writer(f)
                    if wrote_num == 0:
                        writer.writerows([result_headers])
                    writer.writerows(result_data)
            else:  # Python 3.x
                with open(self.get_filepath('csv'),
                          'a',
                          encoding='utf-8-sig',
                          newline='') as f:
                    writer = csv.writer(f)
                    if wrote_num == 0:
                        writer.writerows([result_headers])
                    writer.writerows(result_data)
            print(u'%d条微博写入csv文件完毕,保存路径:' % self.got_num)
            print(self.get_filepath('csv'))
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def write_txt(self, wrote_num):
        """Write the scraped posts to the TXT file."""
        try:
            temp_result = []
            if wrote_num == 0:
                if self.filter:
                    result_header = u'\n\n原创微博内容: \n'
                else:
                    result_header = u'\n\n微博内容: \n'
                result_header = (u'用户信息\n用户昵称:' + self.nickname + u'\n用户id: ' +
                                 str(self.user_id) + u'\n微博数: ' +
                                 str(self.weibo_num) + u'\n关注数: ' +
                                 str(self.following) + u'\n粉丝数: ' +
                                 str(self.followers) + result_header)
                temp_result.append(result_header)
            for i, w in enumerate(self.weibo[wrote_num:]):
                temp_result.append(
                    str(wrote_num + i + 1) + ':' + w['content'] + '\n' +
                    u'微博位置: ' + w['publish_place'] + '\n' + u'发布时间: ' +
                    w['publish_time'] + '\n' + u'点赞数: ' + str(w['up_num']) +
                    u' 转发数: ' + str(w['retweet_num']) + u' 评论数: ' +
                    str(w['comment_num']) + '\n' + u'发布工具: ' +
                    w['publish_tool'] + '\n\n')
            result = ''.join(temp_result)
            with open(self.get_filepath('txt'), 'ab') as f:
                f.write(result.encode(sys.stdout.encoding))
            print(u'%d条微博写入txt文件完毕,保存路径:' % self.got_num)
            print(self.get_filepath('txt'))
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def write_file(self, wrote_num):
        """Write any posts not yet written to the result files."""
        if self.got_num > wrote_num:
            self.write_csv(wrote_num)
            self.write_txt(wrote_num)

    def get_weibo_info(self):
        """Scrape the user's profile and posts."""
        try:
            url = 'https://weibo.cn/u/%d' % (self.user_id)
            selector = self.deal_html(url)
            self.get_user_info(selector)  # nickname, post/following/follower counts
            page_num = self.get_page_num(selector)  # total number of pages
            wrote_num = 0
            page1 = 0
            random_pages = random.randint(1, 5)
            for page in tqdm(range(1, page_num + 1), desc=u'进度'):
                self.get_one_page(page)  # get all posts on this page

                if page % 20 == 0:  # write to file every 20 pages
                    self.write_file(wrote_num)
                    wrote_num = self.got_num

                # Random waits lower the risk of being rate-limited. Crawling
                # too fast tends to get the account restricted (the restriction
                # lifts by itself after a while), and random pauses imitate a
                # human reader. By default the crawler sleeps 6 to 10 seconds
                # after every 1 to 5 pages; if it is still restricted,
                # increase the sleep time.
                if page - page1 == random_pages and page < page_num:
                    sleep(random.randint(6, 10))
                    page1 = page
                    random_pages = random.randint(1, 5)

            self.write_file(wrote_num)  # write the remaining (fewer than 20) pages
            if not self.filter:
                print(u'共爬取' + str(self.got_num) + u'条微博')
            else:
                print(u'共爬取' + str(self.got_num) + u'条原创微博')
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()

    def start(self):
        """Run the crawler."""
        try:
            self.get_weibo_info()
            print(u'信息抓取完毕')
            print('*' * 100)
            if self.pic_download == 1:
                self.download_pictures()
        except Exception as e:
            print('Error: ', e)
            traceback.print_exc()


def main():
    try:
        # Usage example: give a user id; all results are stored in the wb instance
        user_id = 1669879400  # any valid user id except the crawler account's own
        filter = 1  # 0: all posts (original + reposts); 1: original posts only
        pic_download = 1  # 0: do not download original images; 1: download them
        wb = Weibo(user_id, filter, pic_download)  # create the Weibo instance wb
        wb.start()  # scrape the posts
        print(u'用户昵称: ' + wb.nickname)
        print(u'全部微博数: ' + str(wb.weibo_num))
        print(u'关注数: ' + str(wb.following))
        print(u'粉丝数: ' + str(wb.followers))
        if wb.weibo:
            # wb.weibo[0] is the latest or pinned post
            print(u'最新/置顶 微博为: ' + wb.weibo[0]['content'])
            print(u'最新/置顶 微博位置: ' + wb.weibo[0]['publish_place'])
            print(u'最新/置顶 微博发布时间: ' + wb.weibo[0]['publish_time'])
            print(u'最新/置顶 微博获得赞数: ' + str(wb.weibo[0]['up_num']))
            print(u'最新/置顶 微博获得转发数: ' + str(wb.weibo[0]['retweet_num']))
            print(u'最新/置顶 微博获得评论数: ' + str(wb.weibo[0]['comment_num']))
            print(u'最新/置顶 微博发布工具: ' + wb.weibo[0]['publish_tool'])
    except Exception as e:
        print('Error: ', e)
        traceback.print_exc()


if __name__ == '__main__':
    main()
```

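The publish-time handling in `get_publish_time` is the part of the listing that is easiest to get wrong, so here is a standalone sketch of the same idea that can be run without network access. `normalize_publish_time` is a hypothetical function, the current time is passed in explicitly to make the result reproducible, and the sample inputs are assumptions about what weibo.cn shows, inferred from the slicing in the listing rather than taken from real pages.

```python
from datetime import datetime, timedelta


def normalize_publish_time(raw, now):
    """Convert a relative or partial time string to 'YYYY-MM-DD HH:MM'.

    Hypothetical helper; `raw` is assumed to be one of:
    '刚刚' (just now), 'N分钟前' (N minutes ago), '今天 HH:MM' (today),
    'MM月DD日 HH:MM' (this year), or a full 'YYYY-MM-DD HH:MM:SS'.
    """
    fmt = '%Y-%m-%d %H:%M'
    if u'刚刚' in raw:
        return now.strftime(fmt)
    if u'分钟' in raw:
        minutes = int(raw[:raw.find(u'分钟')])
        return (now - timedelta(minutes=minutes)).strftime(fmt)
    if u'今天' in raw:
        # Characters 3..7 hold 'HH:MM' after the two-character word and a space.
        return now.strftime('%Y-%m-%d') + ' ' + raw[3:8]
    if u'月' in raw:
        return '%s-%s-%s %s' % (now.strftime('%Y'), raw[0:2], raw[3:5], raw[7:12])
    return raw[:16]  # already absolute: drop the seconds


now = datetime(2019, 8, 6, 9, 41)
for sample in (u'刚刚', u'5分钟前', u'今天 08:30', u'08月05日 21:30',
               u'2018-12-31 23:59:00'):
    print(sample, '->', normalize_publish_time(sample, now))
```

Passing `now` as a parameter, instead of calling `datetime.now()` inside the function as the listing does, is only a convenience for testing; the conversion rules are the same.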
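Notes

1. user_id must not be the crawler account's own user_id. To scrape Weibo you must first log in to some Weibo account, which we will call the crawler account. The page the crawler account gets when it visits its own profile has a different layout from the page it gets when it visits other users, so the script cannot scrape the crawler account's own posts.

2. The cookie expires. Once it is past its validity period, you need to obtain a new cookie and update it in the script.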
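A quick way to notice an expired cookie is to look at the title of the profile page, which is what `get_nickname` in the source code does. The sketch below isolates that check as a pure function. `cookie_looks_expired` is a hypothetical name, and the sample titles are assumptions inferred from the slicing in the script (it drops the last three characters of the title), not captured from real responses.

```python
def cookie_looks_expired(page_title):
    """Return True if the page title looks like a login page, not a profile.

    Hypothetical helper mirroring the check in get_nickname: the script drops
    the last three characters of the title and compares the remainder with
    two known login-page prefixes.
    """
    nickname = page_title[:-3]
    return nickname in (u'登录 - 新', u'新浪')


# Assumed example titles: a login page versus a normal profile page.
print(cookie_looks_expired(u'登录 - 新浪微博'))
print(cookie_looks_expired(u'Dear-迪丽热巴的资料'))
```

If the check reports an expired cookie, the remedy is the one described in the note above: log in again and replace the cookie value in the script.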


Author: 磨子舒

Link: https://www.pythonheidong.com/blog/article/7119/f8f1da4ad6ea9c7dc9c1/

Source: python黑洞网

Please credit the source when reposting in any form. Infringement will be pursued under the law once discovered.
